Is your data too big? Take a sample

An introduction on sampling tabular data in Python and SQL.

Academics and business practitioners dedicate considerable effort to harness the vast amount of data available today. Since its inception in the 1990s, the term Big Data has created significant hype. However, the actual meaning of “Big” remains elusive because the threshold that splits Big Data and Small Data evolves as storage and processing power increase with every technological advancement.

However, it is unlikely that you would need such tremendous amounts of data in the early stages of any Data Science project. At that time, the focus should be on exploratory analysis, supported by common descriptive statistics, along with picking the appropriate techniques by testing some small models.

Besides, you may find it convenient to perform these tasks on your laptop or another computer with limited resources. There is plenty of time to deploy your full-fledged model in a top-end server when you prove that everything works as expected.

Consequently, every data scientist should be proficient in taking samples from large datasets. This procedure should be quick and preserve the statistical properties of the original data. The latter is typically ensured by randomly selecting which records to keep. In regard to speed and efficiency, our options depend on the data format and tools at our disposal.

Datasets usually present themselves in various forms, either structured (e.g., series, tables, panels) or unstructured (e.g., text, images). This post covers techniques to sample tabular data often stored in files or databases effortlessly.

Sampling “Comma-Separated Values” (CSV) files

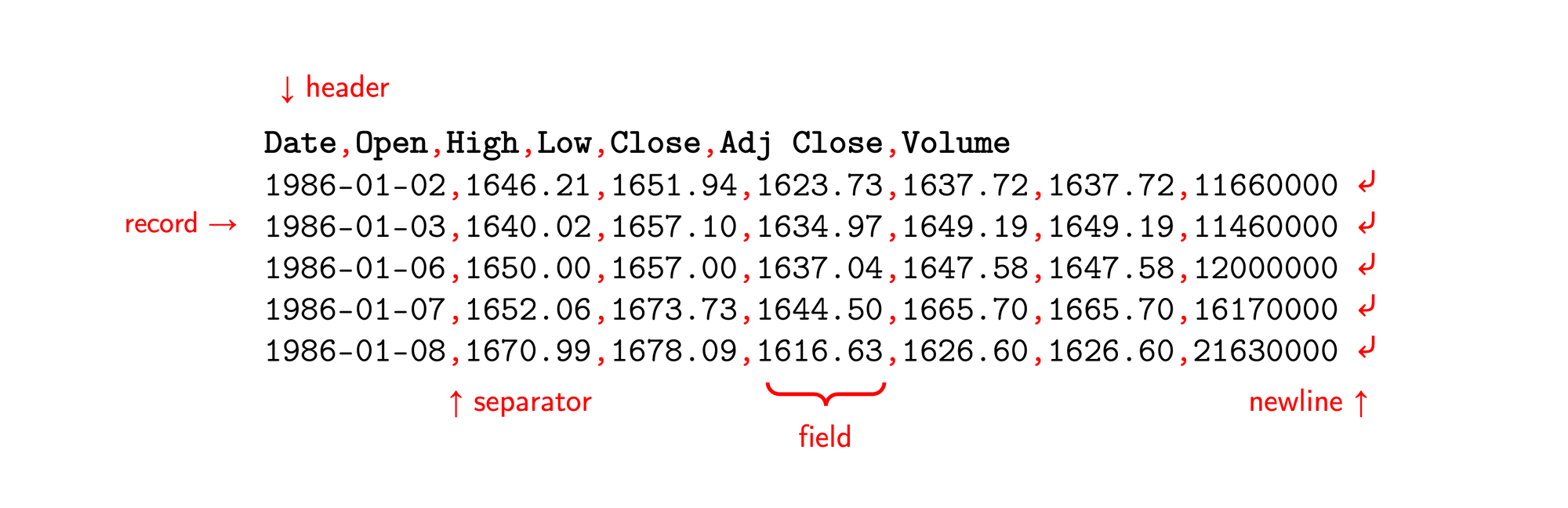

Even though CSV files are rather old (originating around 1972), it is a popular way to store structured data in long- or wide-format tables. A CSV file consists of multiple records (rows) terminated by newlines, and each record contains various fields (columns) delimited by a separator character (typically ,). Moreover, the first row is usually reserved for a header with the same syntax as any given row.

The main advantages of this format are:

- Plain text, human-readable, and easy to understand.

- Not proprietary.

- There are no limitations on size (just the storage available).

Conversely, there are some limitations:

- There is no standard. However, RFC 4180 gets pretty close.

- These files can only be processed sequentially.

- Text fields usually require escaping special characters such as line breaks or commas to prevent interference with the parsing. For this reason, text fields are usually surrounded by quotation marks.

- In some countries, the comma (

,) plays the role of the decimal point (e.g.,3,141592). This coincidence usually requires the choice of a completely different separator such as the semicolon (;), the pipe (|), or the tab character. - CSV files do not explicitly state the character encoding, so you have to take care of it while reading and writing them.

- This format does not support metadata. For example, you should know beforehand the field types or autodetect them. There is no way to determine if the header is present until you open the file and check.

Putting aside these impediments, CSV files are very popular. Depending on the circumstances, they may grow too large to be processed efficiently on limited hardware. If you ever face this problem, do not worry: taking a sample is relatively straightforward in Python.

import pandas as pd

from random import random, seed

# set random seed

seed(42)

# how much to keep? (10%)

SAMPLE_SIZE = 0.1

# read and sample the CSV

df = pd.read_csv('source.csv',

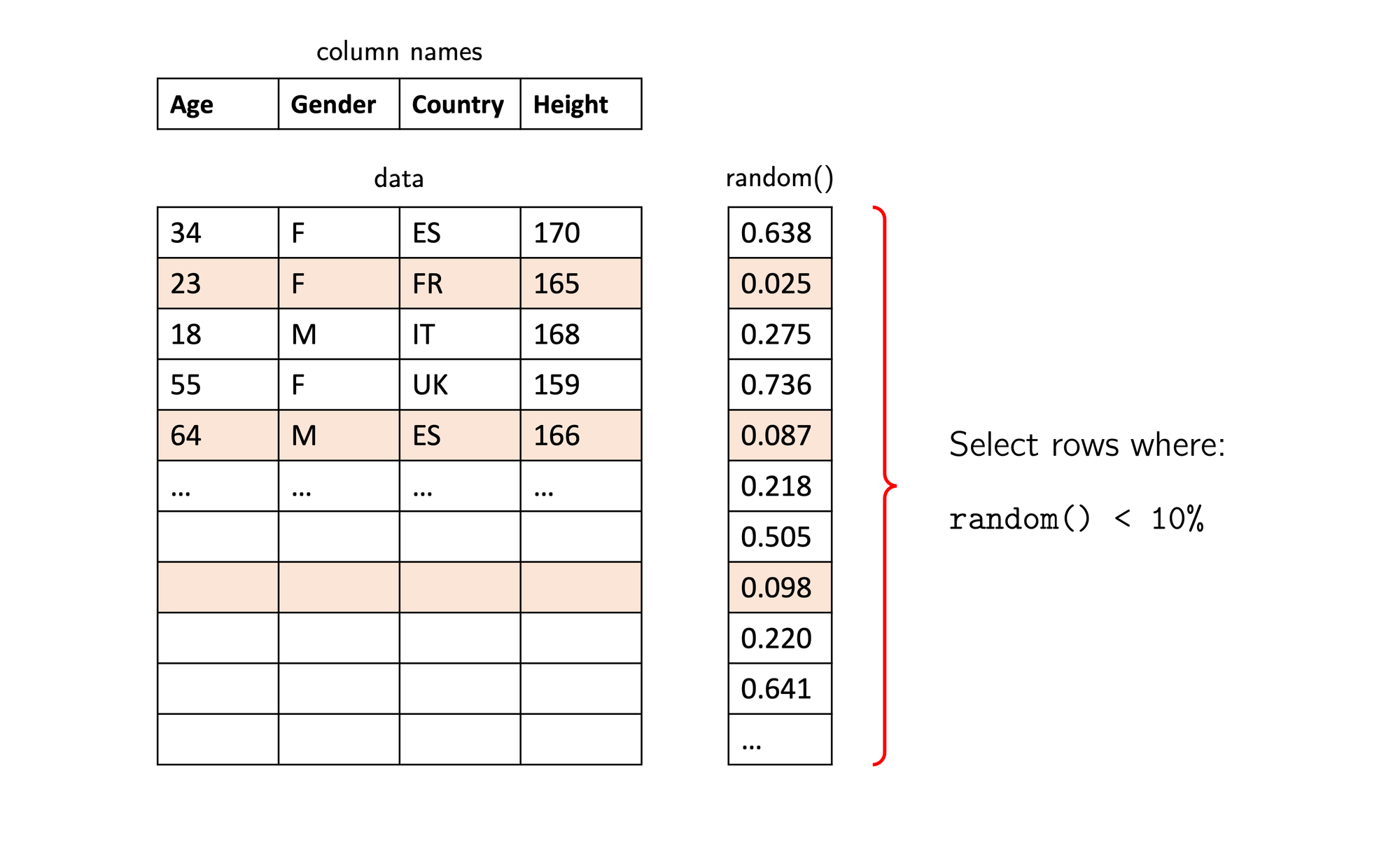

skiprows = lambda x: random() > SAMPLE_SIZE)The code above loads approximately 10% of the content of a CSV file, randomly selecting the rows to read. This method is good enough to preserve the statistical properties of the sample. Note that this approach is preferable as it samples as you read, instead of loading the whole file into memory (which may not be feasible) and picking the sample afterward.

It invokes the read_csv(...) function in the package pandas. Specifically, it passes a callable to the argument skiprows that decides on the fly which rows to process according to a uniformly distributed random variable between 0 and 1. So, to keep about 10% of the content, every row with a random() result above 0.1 must be discarded.

Randomness and repeatable samples

What about the seed(...)? Sampling is random in nature, so you will get different results every time you do it. In some instances, you may need the selection to be reproducible, so the result stays the same no matter how many times you sample or who is sampling. It is wise to fix the random number generator’s seed in those scenarios. You may have noticed already that 42 is a popular choice everywhere on the internet for random seeds: the explanation is a tribute to the famous book Hitchhiker’s Guide to the Galaxy by Douglas Adams.

Sampling database tables

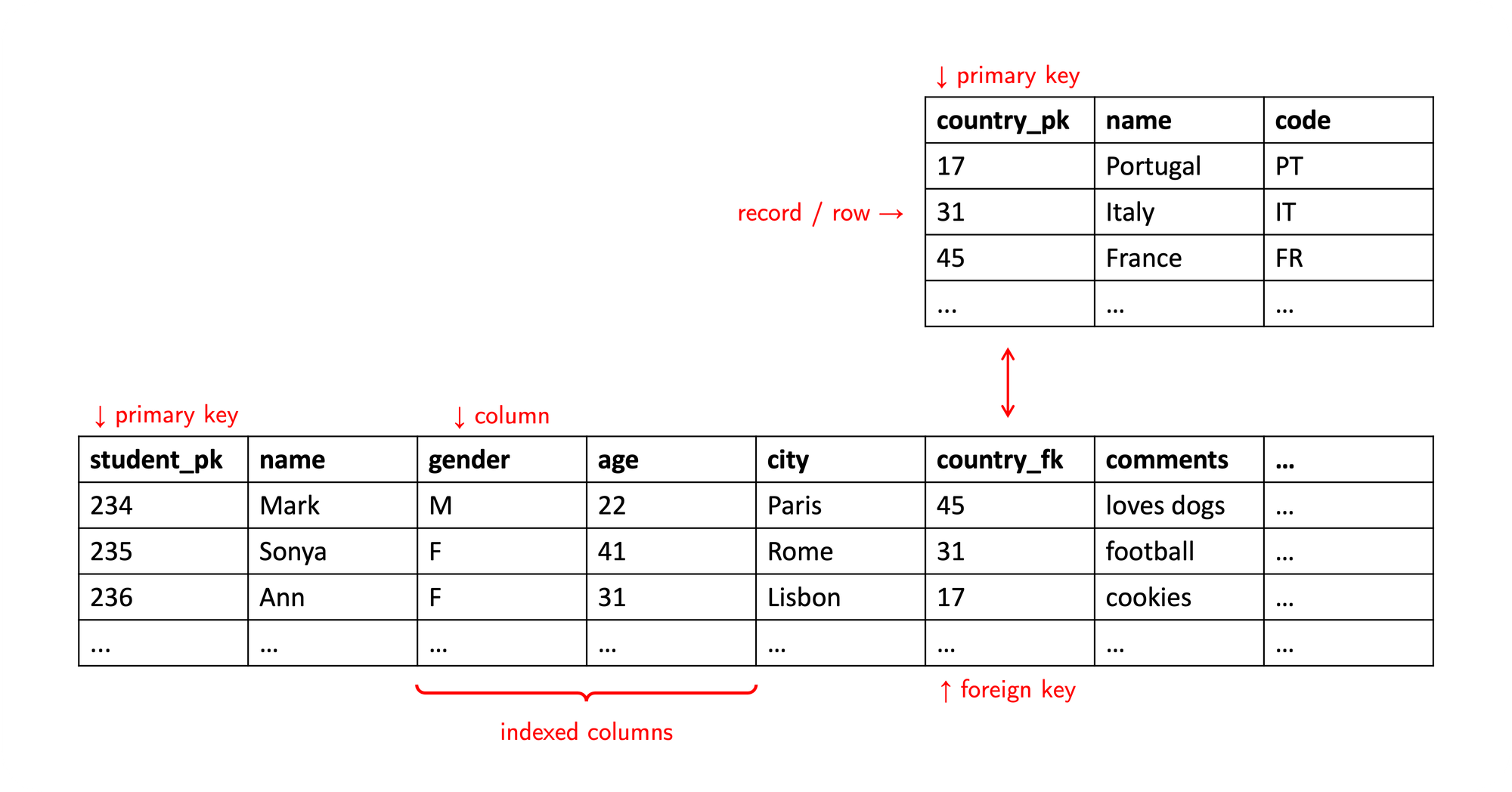

Relational databases (as opposed to non-relational ones) are software packages that store and organize data in tabular form. Database tables consist of records and columns. They are typically linked together through primary/foreign key relationships to reduce redundancy and improve integrity. This design technique is called normalization.

One of the reasons to maintain a database is the ability to query tables for a specific subset of the data available. To that end, most vendors support a standard language called Structured Query Language, or SQL for short.

It is good to identify which columns are most likely to be queried and set up indices on them. A database index is an internal data structure that speeds up queries at the cost of consuming additional storage. Like a book index, it allows quickly locating data in a table without reading it entirely from top to bottom.

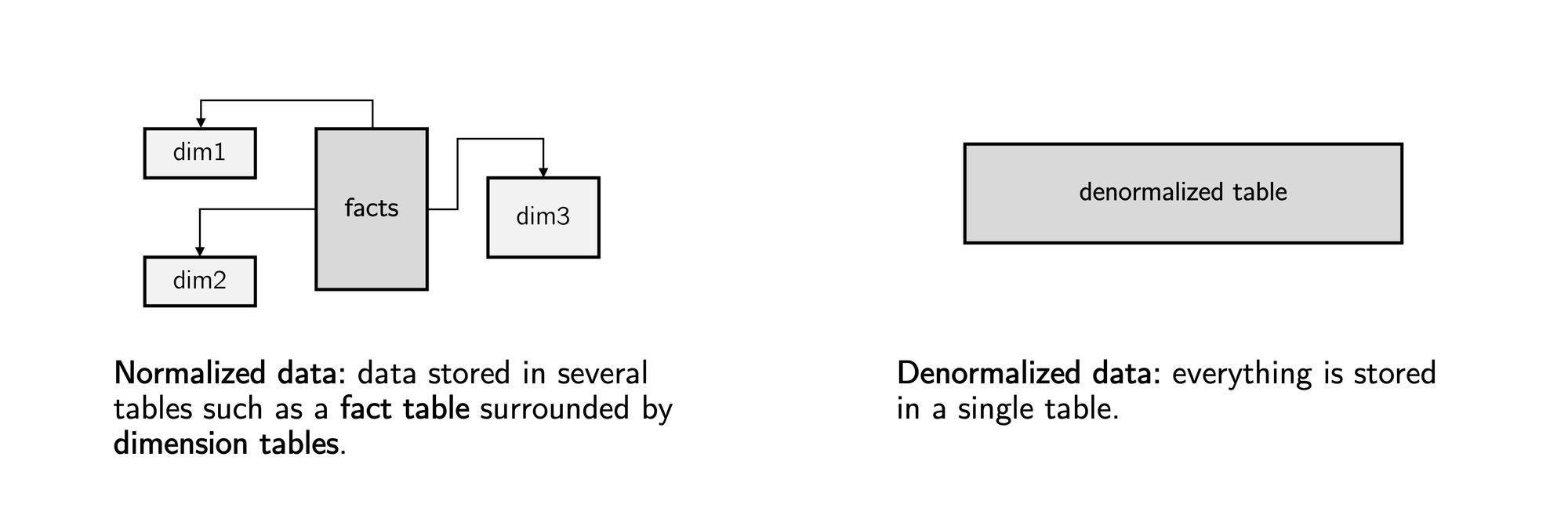

Writing efficient queries is of vital importance in Data Science. In the end, we typically require data arranged in just one large table to train and test models. Sometimes data present themselves already denormalized, which reduces query complexity as there is no need to join tables together. In other less favorable cases, we need to write queries that combine all the pertinent tables and return the desired data appropriately. While denormalization boosts performance, it also comes at the expense of higher storage requirements.

Back to our topic, it is easy to retrieve a sample from a large database table or even from a long query result. Some SQL features are vendor-specific, so the following examples are written for two of the most renowned open-source databases: PostgreSQL and MariaDB (mySQL).

-- PostgreSQL: using a random() call per row.

SELECT *

FROM the_table

WHERE RANDOM() < 0.1

-- PostgreSQL: using tablesample. note that size is set in percentage.

SELECT *

FROM the_table

TABLESAMPLE BERNOULLI (10) REPEATABLE (42)

-- MariaDB: using a rand() call per row.

SELECT *

FROM the_table

WHERE RAND() < 0.1The three examples retrieve a 10% sample. The idea is pretty much the same as with CSV files. For every record in the result, we compute a random number in the form of a probability between 0 and 1. If such a probability is below a certain threshold, the record is processed. Otherwise, it is discarded.

Alternatively, you may use the following code to retrieve a sample with a specific size (e.g., 100 rows). Unfortunately, it is relatively slow.

-- PostgreSQL: specific sample size of 100 rows.

SELECT *

FROM the_table

ORDER BY RANDOM()

LIMIT 100

-- MariaDB: specific sample size of 100 rows.

SELECT *

FROM the_table

ORDER BY RAND()

LIMIT 100Python and SQL working together

The code below shows a complete example that:

- Establishes a connection to a postgreSQL database.

- Executes an SQL and randomly selects around 10% of the resulting rows.

- Stores the result in a pandas

DataFrame.

import pandas as pd

import psycopg2 as postgres

# postgresql database connection details

credentials = {'host' : '<server>',

'port' : 5432,

'dbname' : '<database_name>',

'user' : '<user>',

'password' : '<password>'

}

# establish the connection

connection = postgres.connect(**credentials)

# SQL query

sql_query = '''

SELECT *

FROM the_table

WHERE

some_column = %s

AND another_colum = %s

AND RANDOM() < 0.10

'''

# some parameters

parameters = ['value1', 'value2']

# execute the query

df_sample = pd.read_sql_query(sql_query,

connection,

params = parameters)Conclusion

Taking a sample in the early stages of any analysis makes it possible to detect general trends and patterns in data quickly. This will guide subsequent decisions, such as which models to implement.

How big should a sample be? There are plenty of factors to consider, ranging from statistical significance to memory constraints. It should be small enough to fit in your computer’s memory and manageable, so the preliminary results will not take too much time. But there is a catch: a small sample may miss the effect you are trying to detect if it is too faint.

Hope this article is valuable to you. Happy coding!

References

- Comma-Separated Values.

- RFC 4180.

- Pandas package: read_csv().

- Random package: random(), seed().

- The Hitchhiker’s Guide to the Galaxy (Douglas Adams, 1979).

- Structured Query Language (SQL).

- Database index.

- Database normalization.

- PostgreSQL: select tablesample, random().

- MariaDB: rand().

- Fetching random rows from a table (advanced).